How does ChatGPT work? Explained for ANYONE to understand.

12/9/2025

When ChatGPT came out, I was in my final year of university (at quite a prestigious one in the UK) studying Computer Science, like the little nerd I am. Anyway, while I was making ChatGPT explain lecture notes as if they were an Eminem rap, it seemed like every other student in my cohort was discussing how it worked. They went on about “hyperparameters”, “weights”, “models” and all sorts of terms I had never heard before. But the one term I could not avoid hearing was “LLM”.

What the f*ck is an LLM?

That was 3 years ago now. And honestly, at the time, no part of me gave a toss about learning any of it... until a month ago. I’ve studied Computer Science since I was 13; how did I not know how ChatGPT (or an “LLM”, short for Large Language Model) works? I felt a bit insecure, to be honest. So I began researching, and even with my advanced Computer Science knowledge, it took a while to wrap my head around it all. Every damn article, blog and video explaining LLMs would throw advanced terms at you and just expect you to know them.

And so we arrive here. I aim to break the trend and explain, at a very high level, how ChatGPT works. I’m not here to bombard you with maths, technical terms or complex theories - just plain old English.

What does ChatGPT actually do?

Before we go into HOW ChatGPT answers your question, I think it’s best to understand what it’s actually doing when you ask it a question.

Does it simply do a Google search with your question and copy text from the first 3 websites?

Does it have its own digital brain, loaded with data, and use its vast knowledge to answer?

In fact, when ChatGPT first came out, I heard a conspiracy theory claiming that OpenAI had hired cheap labour and assigned each worker to a ChatGPT user. When the user asked a question, the person behind the session would find them an answer as quickly as possible.

Interesting as these ideas are, none of them is how ChatGPT works.

At its core, ChatGPT does one thing: it answers the question “Given some text, what should the next piece of text be?” Okay, walk with me here. Say I input “The cat sat on the” into ChatGPT.

ChatGPT is built to work out which piece of text is MOST LIKELY to come next, and here it will probably decide on “mat”, or something along those lines. (Strictly speaking, it gives every possible next piece of text a probability and then picks one of the likeliest, which is why the same question can get slightly different answers.)

That’s it, nothing else. It doesn’t think, it just predicts.
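If you’re curious what “predict the most likely next piece of text” looks like written down, here’s a tiny Python sketch. The probabilities are completely made up for illustration (the real model works them out itself, for every piece of text it knows); the only point is that “predicting” boils down to picking the option with the highest probability.

```python
# Made-up probabilities for what might come after "The cat sat on the".
# A real model would produce a probability like this for every token it knows.
next_word_probabilities = {
    "mat": 0.62,
    "sofa": 0.21,
    "floor": 0.12,
    "moon": 0.05,
}

def most_likely_next_word(probabilities: dict[str, float]) -> str:
    """Return the word with the highest probability."""
    return max(probabilities, key=probabilities.get)

prompt = "The cat sat on the"
print(prompt, most_likely_next_word(next_word_probabilities))  # The cat sat on the mat
```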

How does it predict?

Step 1 - Tokenization

The first thing to clear up: computers don’t have brains. They don’t understand words; they understand numbers. So when I input “Hello ChatGPT”, it needs to be translated into a language the computer understands - the language of numbers! (That was a bit corny, I apologise.)

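ChatGPT has a fixed vocabulary of words and pieces of words (roughly 100,000 or more of them), and each piece has its own unique number. Common words usually get a number of their own, while rarer words are split into several smaller pieces:

“Hello ChatGPT” -> [“Hello”] = 123, [“ChatGPT”] = 456 -> [123, 456] (numbers made up for illustration)

These pieces of text, each with its own number, are called “tokens”.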
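Here’s a toy version of that lookup in Python. The vocabulary and numbers are invented (reusing the made-up 123 and 456 from the example), and the splitting rule, which grabs the longest piece it recognises at each step, is a simplified stand-in for the byte-pair encoding that real tokenizers such as OpenAI’s use. It does show the key idea, though: a word the vocabulary doesn’t know gets broken into smaller pieces it does know.

```python
# A made-up vocabulary: each piece of text the model knows has its own number.
# Real vocabularies have roughly 100,000 or more pieces.
# Note the leading spaces: GPT-style tokenizers usually keep the space at the start of a word.
VOCAB = {
    "Hello": 123,
    " ChatGPT": 456,
    " Chat": 789,
    "Bot": 321,
}

def tokenize(text: str) -> list[int]:
    """Turn text into token numbers by repeatedly taking the longest known piece."""
    tokens = []
    start = 0
    while start < len(text):
        # Try the longest possible piece first, then shorter and shorter ones.
        for end in range(len(text), start, -1):
            piece = text[start:end]
            if piece in VOCAB:
                tokens.append(VOCAB[piece])
                start = end
                break
        else:
            # No piece of any length matched, so this toy vocabulary can't handle the text.
            raise ValueError(f"No token for {text[start]!r}")
    return tokens

print(tokenize("Hello ChatGPT"))  # [123, 456]
print(tokenize("Hello ChatBot"))  # [123, 789, 321] ("ChatBot" is split into " Chat" + "Bot")
```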

Step 2 - Transformer

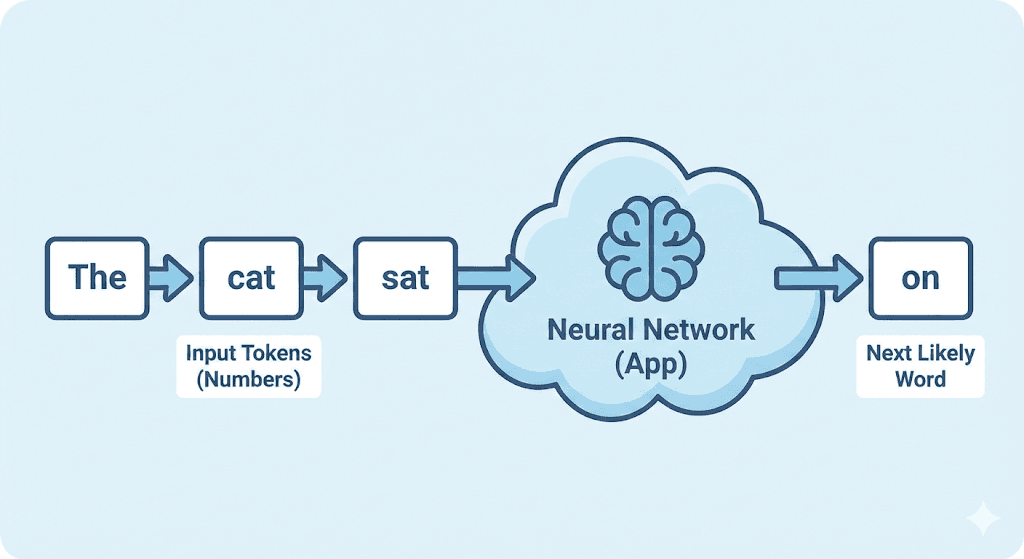

Now this is where most people with no technical background get lost in most tutorials. So to stop that happening here, I’m going to give a very, VERY basic description of what happens at this stage. There’s a thing in the Machine Learning world called a Neural Network. It’s loosely inspired by the brain, but for our purposes just think of it as an app. The particular kind of neural network ChatGPT uses is called a Transformer (the “T” in “GPT”). You don’t need to know how it works; all you really need to know is what it does: it takes a list of tokens as input (remember, those are just numbers representing words) and outputs the word that is most likely to come next. THAT’S IT. Hopefully the diagram above (generated with AI, by the way) gives you an idea of this.
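To make the “just think of it as an app” idea a bit more concrete, here is the shape of that app in Python. `fake_transformer` is a hypothetical stand-in that returns hard-coded scores instead of doing any real computation. The parts that mirror the real thing are the interface (token numbers in, one score for every word in the vocabulary out) and the last step, where the scores are turned into probabilities with the softmax function and the highest one wins.

```python
import math

# Hypothetical: a tiny vocabulary of words the pretend network can output.
VOCAB = ["mat", "sofa", "moon", "<end>"]

def fake_transformer(tokens: list[int]) -> list[float]:
    """Stand-in for the real network: takes token numbers and returns one score per
    vocabulary word. A real Transformer computes these scores from the tokens;
    this fake one ignores them and returns fixed numbers."""
    return [3.1, 2.0, 0.4, -1.0]

def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that are all positive and add up to 1."""
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]  # subtracting the max avoids overflow
    total = sum(exps)
    return [e / total for e in exps]

def predict_next_word(tokens: list[int]) -> str:
    """Run the (fake) network, convert scores to probabilities, return the likeliest word."""
    probabilities = softmax(fake_transformer(tokens))
    best_index = max(range(len(VOCAB)), key=lambda i: probabilities[i])
    return VOCAB[best_index]

# Made-up token numbers standing in for "The cat sat on the".
print(predict_next_word([11, 22, 33, 44, 55]))  # mat
```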

But obviously ChatGPT doesn’t just give you one-word responses. After the Neural Network (or Transformer) predicts the next word, unless it has predicted that the response is finished, it adds the word it just predicted to the end of the input tokens and puts the whole thing through the Transformer again. It repeats this, one word at a time, until it predicts the end. So in the diagram’s example, it would input “The cat sat on” into the neural network and get the next word.
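The predict, add, repeat loop can be sketched in a few lines of Python. `pretend_next_word` is a hypothetical stand-in for the whole network (it just checks the last word against a tiny hand-written table), it works with words instead of token numbers to keep things readable, and `<end>` stands in for the special “I’m finished” token real models predict. The loop itself is the part that matches the description: predict, append, go round again until the end. The maximum length is there because real systems also cap how long a reply can get.

```python
# Hypothetical lookup table standing in for the neural network:
# given the last word so far, it "predicts" the next one.
PRETEND_PREDICTIONS = {
    "The": "cat",
    "cat": "sat",
    "sat": "on",
    "on": "the",
    "the": "mat",
    "mat": "<end>",
}

def pretend_next_word(words: list[str]) -> str:
    """Stand-in for the network. A real model looks at ALL the words so far, not just the last one."""
    return PRETEND_PREDICTIONS.get(words[-1], "<end>")

def generate(prompt: str, max_words: int = 20) -> str:
    words = prompt.split()
    for _ in range(max_words):
        next_word = pretend_next_word(words)
        if next_word == "<end>":  # the model predicted that the response is finished
            break
        words.append(next_word)  # add the prediction to the input and go round again
    return " ".join(words)

print(generate("The cat"))  # The cat sat on the mat
```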

Step 3 - Training

To be honest, this actually happens first, long before you ever type a question, but I’ve left it until last because it answers the question: “How does the neural network know which word is most likely to come next?”

Again, I want to keep this as simple as possible. Essentially, the guys at OpenAI who made ChatGPT exposed the neural network to an enormous amount of text from the internet. You might have heard the term “training”. This is exactly that. During training, the network is repeatedly shown some text, asked to guess the next word, and nudged slightly towards the right answer each time. It has done this over an insane, outrageous, stupid amount of text, so it has picked up the patterns of how text usually continues. When it sees your question/input, it doesn’t look anything up; it uses those learned patterns to work out what most often comes next. If you ask ChatGPT “What colour is the sky?”, the internet probably has millions of bits of text like “The sky is blue”, “Blue sunny sky” and “Clear blue sky”, so it would not be difficult for the network to predict that the next word is “blue”.
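Real training adjusts billions of numbers inside the network rather than keeping a tally, but the “see lots of text, remember what usually comes next” intuition can be shown with plain counting. To be clear, this sketch is a much older and simpler technique than a Transformer (a so-called bigram model, which only ever looks at the one previous word), and its four-sentence “internet” is made up:

```python
from collections import Counter, defaultdict

# A made-up, very small "internet" to learn from.
training_text = [
    "the sky is blue",
    "a clear blue sky",
    "the sky is blue today",
    "the sky is grey",
]

# "Training": count which word follows each word.
follow_counts = defaultdict(Counter)
for sentence in training_text:
    words = sentence.split()
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1

def predict_next(word: str) -> str:
    """Return the word most often seen after `word` during training."""
    counts = follow_counts.get(word)
    if not counts:
        return "<unknown>"  # never saw anything follow this word
    return counts.most_common(1)[0][0]

print(predict_next("is"))   # blue ("blue" followed "is" twice, "grey" only once)
print(predict_next("sky"))  # is
```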

Conclusion

I have to end every blog post by saying this, but it is important: this was a very, VERY basic breakdown of what is happening. If you followed along and want to learn more, I’d suggest going on YouTube and watching someone like Andrej Karpathy, who has 3-hour-long videos going through how ChatGPT works in detail. There are tons of resources out there explaining how these models work in depth. Hopefully, this blog gave you something solid to stand on as you tackle the more intricate details.